Emerging cloud applications such as machine learning, AI, and big data analytics require high-performance computing systems that can sustain growing data-processing demands without consuming excessive power. To this end, many cloud operators have started adopting heterogeneous infrastructures that deploy hardware accelerators, such as FPGAs, to increase the performance of computationally intensive tasks. However, most hardware accelerators are hard to program efficiently, because they rely on languages and tools that are not widely used among software developers, such as OpenCL, VHDL, and HLS.

According to a 2016 Databricks survey, 91% of data scientists care most about the performance of their applications and 76% care about ease of programming. The most efficient way for data scientists to use hardware accelerators like FPGAs is therefore through a library of IP cores that speed up the most computationally intensive part of the algorithm. Ideally, what most data scientists want is better performance, lower TCO, and no need to change their code.

InAccel, a world leader in application acceleration, has released a new version of its Accelerated ML suite, which allows data scientists to speed up their machine learning applications without changing their code. The suite is available on AWS and accelerates Apache Spark MLlib applications in the cloud with zero code changes. The platform is fully scalable and supports the main new features of Apache Spark, such as pipelines and DataFrames. For data scientists who prefer to work with general-purpose programming languages like C/C++, Java, Python, and Scala, InAccel offers all the required APIs on AWS, making the use of FPGAs in the cloud as simple as calling a function.

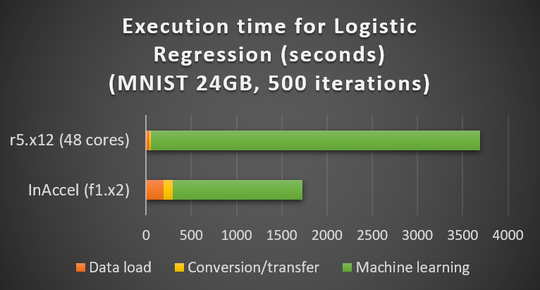

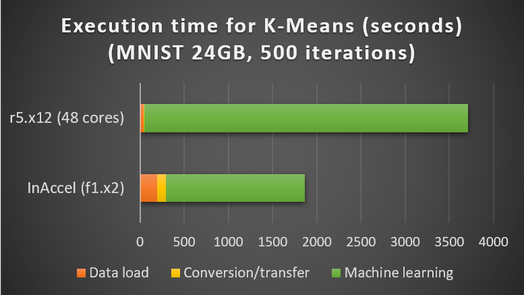

Currently, InAccel offers two widely used algorithms for machine learning training: logistic regression with batch gradient descent (BGD) and K-means clustering. Both algorithms were evaluated using the MNIST dataset (24 GBytes). The performance evaluation over 100 iterations showed that the InAccel Accelerated ML suite for Apache Spark can achieve over 3x speedup for the machine learning part and up to 2.5x overall (including Spark initialization, data extraction, etc.).
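To see why training dominates the runtime, it helps to recall what batch gradient descent does: every iteration touches every sample and every feature. The following is a minimal pure-Python sketch of binary logistic regression trained with BGD. It is not InAccel's FPGA kernel or Spark MLlib's implementation; the function names, learning rate, and toy data are illustrative assumptions.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_bgd(samples, labels, iterations=100, learning_rate=0.5):
    """Binary logistic regression trained with batch gradient descent.

    Each iteration visits all samples and all features, so the work per
    iteration grows with (number of samples) x (number of features).
    """
    n_samples = len(samples)
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(iterations):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        # Accumulate the gradient over the whole batch before updating.
        for x, y in zip(samples, labels):
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = pred - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - learning_rate * g / n_samples
                   for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n_samples
    return weights, bias


def predict(weights, bias, x):
    """Return the predicted class (0 or 1) for one sample."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(score) >= 0.5 else 0


# Toy, linearly separable data (hypothetical, for illustration only).
toy_samples = [[-2.0, -1.0], [-1.0, -2.0], [1.0, 2.0], [2.0, 1.0]]
toy_labels = [0, 0, 1, 1]
toy_weights, toy_bias = train_logistic_bgd(toy_samples, toy_labels)
toy_predictions = [predict(toy_weights, toy_bias, x) for x in toy_samples]
```

The doubly nested loop over samples and features is the part that an accelerator would plausibly offload, since it repeats unchanged for each of the 100 iterations.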

Data loading and extraction run much faster on the r5 instance, thanks to its 48 cores. However, for the machine learning stage, which is the most computationally intensive part, the FPGA-accelerated cores achieve up to 3x speedup compared to the multi-core instance.
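The gap between the 3x training speedup and the 2.5x end-to-end figure comes from the stages that are not accelerated. Here is a rough sketch of that relationship. The stage timings are made-up values chosen only to illustrate the arithmetic; they are not measurements from this evaluation, and the function and stage names are hypothetical.

```python
def overall_speedup(baseline_stages, accelerated_stages):
    """End-to-end speedup = total baseline time / total accelerated time."""
    return sum(baseline_stages.values()) / sum(accelerated_stages.values())


# Hypothetical timings in seconds (NOT measured data).
r5_stages = {"load_and_extract": 10.0, "training": 300.0}
f1_stages = {"load_and_extract": 24.0, "training": 100.0}

training_speedup = r5_stages["training"] / f1_stages["training"]
end_to_end_speedup = overall_speedup(r5_stages, f1_stages)

print(f"training speedup:   {training_speedup:.1f}x")
print(f"end-to-end speedup: {end_to_end_speedup:.1f}x")
```

With these assumed timings, loading is slower on the FPGA instance, training is 3x faster, and the overall result lands at 2.5x because training accounts for most of the runtime.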

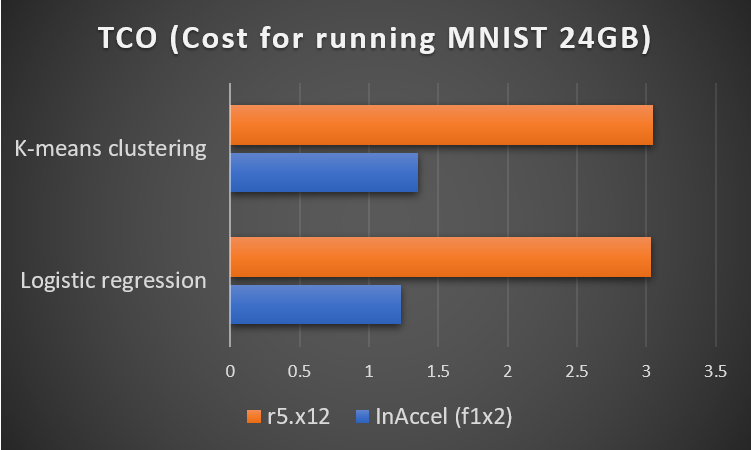

Data scientists not only get higher performance but can also reduce the TCO and keep their company's CFO happy. In the performance evaluation, we compared the Accelerated ML suite against r5.12xlarge instances, which cost the same as an f1.2xlarge instance plus the price of the InAccel IP cores ($3/hour in total). With the InAccel Accelerated ML suite for Apache Spark, you can achieve up to 3x speedup and also reduce the cost by up to 2.5x. If your application ran for 2.5 hours and cost $7.50, you can now get the same results in 1 hour (2.5x speedup) for a total cost of $3.
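The arithmetic behind this example can be written out as a short sketch. It assumes, as the worked example implies, that both configurations are billed at the same $3/hour rate, so the saving comes entirely from the shorter runtime. The function and variable names are illustrative.

```python
def run_cost(hours, price_per_hour):
    """Total cost of a job billed at a flat hourly rate."""
    return hours * price_per_hour


# Assumption taken from the example: both setups cost $3/hour.
PRICE_PER_HOUR = 3.0

baseline_hours = 2.5   # runtime on the CPU-only instance
speedup = 2.5          # overall (end-to-end) speedup

accelerated_hours = baseline_hours / speedup
baseline_cost = run_cost(baseline_hours, PRICE_PER_HOUR)
accelerated_cost = run_cost(accelerated_hours, PRICE_PER_HOUR)
cost_reduction = baseline_cost / accelerated_cost

print(f"baseline:    {baseline_hours} h, ${baseline_cost:.2f}")
print(f"accelerated: {accelerated_hours} h, ${accelerated_cost:.2f}")
print(f"cost reduction: {cost_reduction:.1f}x")
```

At equal hourly prices, the cost reduction equals the end-to-end speedup, which is why the 2.5x overall figure, and not the 3x training figure, determines the saving.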

Try the InAccel ML suite now and get up to 3x speedup and 2x lower TCO without changing your code. Is there a catch? Currently, the Accelerated ML suite supports up to 1024 features for logistic regression and K-means, and up to 32 classes/centroids. The FPGA architecture is currently optimized for these limits. Soon, we will release a new, fully customizable framework that supports a higher number of features and classes.
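Before moving a workload over, it may be worth checking it against these limits. The helper below is a hypothetical pre-flight check, not part of any InAccel API; only the two limits (1024 features, 32 classes/centroids) come from the text above.

```python
# Limits stated for the current Accelerated ML suite.
MAX_FEATURES = 1024
MAX_CLASSES_OR_CENTROIDS = 32


def fits_accelerator_limits(num_features, num_classes_or_centroids):
    """Return True if a model's dimensions are within the stated limits.

    Hypothetical helper for illustration; not an InAccel function.
    """
    return (1 <= num_features <= MAX_FEATURES
            and 1 <= num_classes_or_centroids <= MAX_CLASSES_OR_CENTROIDS)


# MNIST digits: 28 x 28 = 784 pixel features, 10 classes.
print(fits_accelerator_limits(784, 10))    # within both limits
print(fits_accelerator_limits(2048, 10))   # too many features
print(fits_accelerator_limits(784, 64))    # too many classes/centroids
```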