FPGAs go serverless on Kubernetes

Serverless frameworks (e.g., AWS Lambda, Azure Functions, Google Cloud Functions, and Kubeless) let you deploy your code (functions) in the cloud without having to manage the underlying infrastructure. With serverless computing, developers reach production faster and benefit from greater scalability, flexibility, easier deployment and, most importantly, lower cost. Serverless functions relieve you of the effort required to purchase, set up, provision, maintain and manage a cloud cluster, so the only thing you need to worry about is improving your products and services.

However, today’s workloads are changing. They are becoming more demanding, and computing is seeking more performance within a smaller power budget. FPGAs are a very promising answer: for many compute-intensive workloads they deliver significantly higher performance at a lower cost than traditional CPUs. FPGA acceleration is therefore becoming indispensable, and cloud providers are already adopting it by offering FPGA devices in their cloud portfolios (e.g., AWS, Nimbix, Alibaba).

So at InAccel we asked ourselves: “Why shouldn’t exploiting FPGA acceleration come with the same flexibility and benefits as serverless computing? Why are developers relieved from provisioning and maintaining cloud infrastructure, yet still have to worry about the performance of their functions?”

Our answer is to seamlessly integrate FPGA acceleration into serverless computing. With only two minor modifications, developers can exploit the combined benefits of serverless FPGA-accelerated functions:

● Include InAccel’s accelerator library in your function

● Ask for FPGA resources when deploying your function

Note that InAccel supports both Intel and Xilinx FPGAs. You can therefore find accelerators suitable for your application in InAccel’s, Xilinx’s and Intel’s accelerator portfolios.

FPGA Accelerated Kubeless

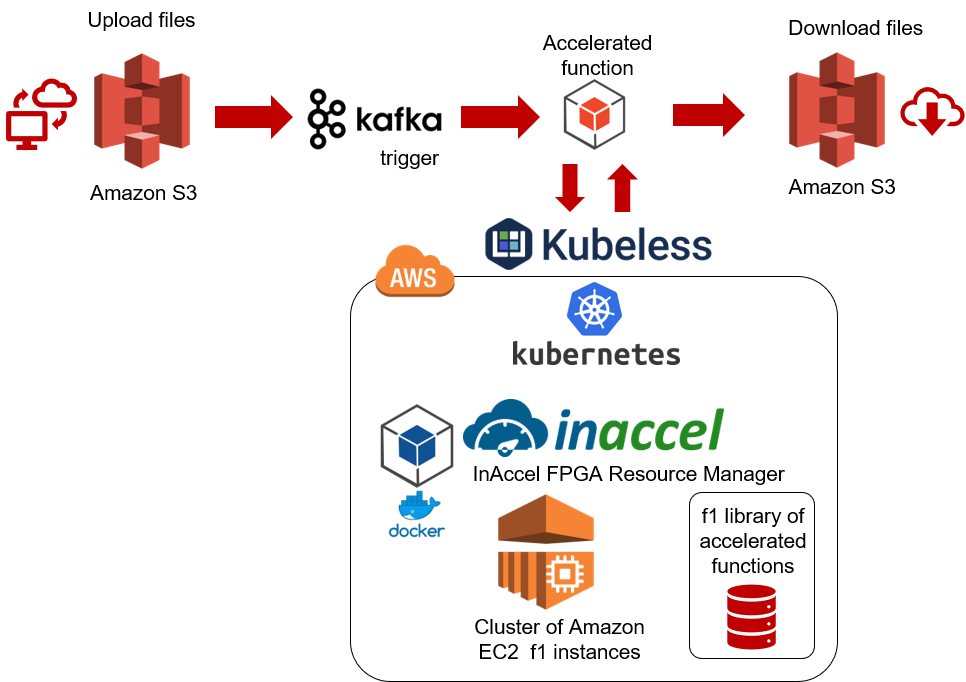

As a case study demonstrating the benefits of an FPGA-accelerated serverless framework, we used AWS F1 instances (VMs with FPGAs) and Kubeless (a Kubernetes-native serverless framework).

We built a simple example that uses Xilinx’s accelerators on AWS for image processing (edge detection and affine transformation). Users only have to upload images to an S3 bucket. InAccel’s FPGA manager is then automatically deployed, processes the data, and stores the results back in the S3 bucket. Users do not need to know anything about how the FPGA execution works.

FPGA-based acceleration using InAccel’s FPGA manager in a serverless deployment

Here are the steps for the serverless deployment of FPGAs using InAccel’s FPGA resource manager.

Step 1: Deploy an Amazon EKS (Elastic Kubernetes Service, AWS’s managed Kubernetes) cluster, specifying F1 instances for the Kubernetes worker nodes.

```bash
eksctl create cluster --region=us-east-1 --node-type=f1.2xlarge
```

Step 2: Deploy Coral, InAccel’s FPGA manager.

```bash
kubectl create -f inaccel-coral-fpga-manager-daemonset.yaml
```

InAccel’s FPGA manager daemonset detects the FPGA resources of every node (number of FPGAs and vendor: Intel or Xilinx). It then updates each node’s information so that requests for FPGAs can be scheduled to nodes with available FPGA resources. From that point, Coral acts as the node manager for the FPGA resources. When Kubernetes pods (functions) ask for FPGA accelerators, Coral receives the acceleration requests. It schedules their execution on the available FPGAs, programs the FPGAs, and transfers data between the pods (functions) and the accelerators.
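To make the scheduling half of this concrete, here is a toy Java model. It mimics how a pod that requests `xilinx.com/fpga` is placed only on a node whose advertised FPGAs are not all taken. It is a simplified sketch, not Coral’s or Kubernetes’ code, and it rests on a few assumptions:

- The class and method names are hypothetical.
- Nodes are chosen first-fit. The real kube-scheduler filters candidate nodes and then scores them.
- Each node advertises a plain FPGA count (an f1.2xlarge exposes one FPGA).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Toy model (hypothetical, not Kubernetes or Coral code) of scheduling pods
// that request an extended resource such as "xilinx.com/fpga".
public class FpgaScheduling {

    // A worker node and the FPGAs it advertises (assumed: 1 on an f1.2xlarge).
    static final class Node {
        final String name;
        final int allocatableFpgas; // count reported for the node
        int allocatedFpgas;         // FPGAs already claimed by scheduled pods

        Node(String name, int allocatableFpgas) {
            this.name = name;
            this.allocatableFpgas = allocatableFpgas;
        }

        int freeFpgas() {
            return allocatableFpgas - allocatedFpgas;
        }
    }

    private final List<Node> nodes = new ArrayList<>();

    void addNode(Node node) {
        nodes.add(node);
    }

    // Places a pod requesting `fpgas` devices on the first node with enough free
    // FPGAs (first-fit); returns empty when no node fits, i.e. the pod stays Pending.
    Optional<String> schedule(int fpgas) {
        for (Node node : nodes) {
            if (node.freeFpgas() >= fpgas) {
                node.allocatedFpgas += fpgas;
                return Optional.of(node.name);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        FpgaScheduling cluster = new FpgaScheduling();
        cluster.addNode(new Node("f1-node-a", 1));
        cluster.addNode(new Node("f1-node-b", 1));
        System.out.println(cluster.schedule(1)); // Optional[f1-node-a]
        System.out.println(cluster.schedule(1)); // Optional[f1-node-b]
        System.out.println(cluster.schedule(1)); // Optional.empty
    }
}
```

Once every FPGA is claimed, further pods stay Pending until a device is freed or another F1 node joins the cluster.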

Step 3: Deploy Kubeless.

```bash
kubectl create ns kubeless
kubectl create -f kubeless-v1.0.3.yaml
```

Step 4: Import InAccel’s library in your function.

Java example:

```java
import com.inaccel.coral.*;
```

InAccel’s library contains several accelerated functions (e.g., K-Means, logistic regression, edge detection), and simply calling one of them triggers an accelerated execution. For example, calling edgedetection(inputImage, outputImage) does three things: it loads the edge detection kernel onto the FPGA, transfers the input image to the FPGA, and transfers the output image back to the application.
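The sequence behind such a one-call API can be sketched as follows. This is a hypothetical illustration, not InAccel’s library, and it makes these assumptions so that it runs without an FPGA:

- The `FpgaDevice` interface, the `edge_detection` kernel name and the extra `device` parameter are invented for the sketch.
- The software Sobel filter is a generic stand-in for whatever the actual Xilinx kernel computes.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch (not InAccel's API) of what a one-call accelerated
// function such as edgedetection(inputImage, outputImage) does internally.
public class AcceleratedCallSketch {

    // Hypothetical device abstraction; a real one would talk to the FPGA manager.
    interface FpgaDevice {
        void loadKernel(String kernelName);
        void writeInput(int[][] image);
        void run();
        int[][] readOutput();
    }

    // Software stand-in: records the call sequence and runs a Sobel filter on the CPU.
    static final class SimulatedDevice implements FpgaDevice {
        final List<String> calls = new ArrayList<>();
        private int[][] input;
        private int[][] output;

        public void loadKernel(String kernelName) { calls.add("load " + kernelName); }
        public void writeInput(int[][] image) { calls.add("write"); input = image; }
        public void run() { calls.add("run"); output = sobel(input); }
        public int[][] readOutput() { calls.add("read"); return output; }
    }

    // The single call the application makes: program, transfer in, execute, transfer out.
    static void edgeDetection(FpgaDevice device, int[][] inputImage, int[][] outputImage) {
        device.loadKernel("edge_detection");  // 1. program the FPGA with the kernel
        device.writeInput(inputImage);        // 2. transfer the input image to the device
        device.run();                         // 3. execute the kernel
        int[][] result = device.readOutput(); // 4. transfer the result back
        for (int y = 0; y < result.length; y++) {
            System.arraycopy(result[y], 0, outputImage[y], 0, result[y].length);
        }
    }

    // Sobel gradient magnitude approximated as |gx| + |gy|, clamped to 255; borders stay 0.
    static int[][] sobel(int[][] img) {
        int h = img.length, w = img[0].length;
        int[][] out = new int[h][w];
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                int gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1])
                       - (img[y - 1][x - 1] + 2 * img[y][x - 1] + img[y + 1][x - 1]);
                int gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1])
                       - (img[y - 1][x - 1] + 2 * img[y - 1][x] + img[y - 1][x + 1]);
                out[y][x] = Math.min(255, Math.abs(gx) + Math.abs(gy));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        SimulatedDevice device = new SimulatedDevice();
        int[][] in = {{0, 0, 255}, {0, 0, 255}, {0, 0, 255}}; // vertical edge
        int[][] out = new int[3][3];
        edgeDetection(device, in, out);
        System.out.println(device.calls); // [load edge_detection, write, run, read]
        System.out.println(out[1][1]);    // 255
    }
}
```

Hiding these four steps behind a single call is what lets a serverless function use the FPGA without any device-specific code.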

Step 5: Deploy your function and request FPGAs.

```bash
kubeless function deploy inaccel-affine --cpu=1 --memory=2G --runtime java1.8 \
  --handler EdgeDetection.inaccelEdgeDetection --from-file EdgeDetection.java \
  --dependencies pom.xml --dryrun -o json | \
jq '.spec.deployment.spec.template.spec.containers[0].resources.requests += {"xilinx.com/fpga": 1}' | \
jq '.spec.deployment.spec.template.spec.containers[0].resources.limits += {"xilinx.com/fpga": 1}' | \
kubectl create -f -
```

Out of the box, Kubeless only supports requesting CPU and memory resources. You therefore patch the function manifest produced by Kubeless (before submitting it to Kubernetes) so that it also requests FPGA resources. The two jq filters add one `xilinx.com/fpga` device to both the requests and the limits of the function’s container.
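The effect of the two jq filters on the container’s `resources` object can be modeled in a few lines of Java. This is a sketch with a hypothetical helper name (`requestExtendedResource`). It mirrors jq’s `+=`, which creates `requests` or `limits` if absent and keeps the existing CPU and memory entries. The starting values in `main` are an assumption about what Kubeless generates.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of what the two jq filters do to the container's "resources" object:
// merge {"xilinx.com/fpga": 1} into both "requests" and "limits".
public class FpgaResourcePatch {

    // Hypothetical helper. Like jq's "+=", it creates the sub-object if it is
    // missing and keeps existing keys (e.g. cpu, memory).
    static void requestExtendedResource(Map<String, Map<String, String>> resources,
                                        String resourceName, int count) {
        String quantity = Integer.toString(count); // extended resources are whole numbers
        resources.computeIfAbsent("requests", k -> new LinkedHashMap<>()).put(resourceName, quantity);
        // Extended resources are not overcommitted: if both are set, request must equal limit.
        resources.computeIfAbsent("limits", k -> new LinkedHashMap<>()).put(resourceName, quantity);
    }

    public static void main(String[] args) {
        // Assumed starting point: CPU and memory in both requests and limits.
        Map<String, Map<String, String>> resources = new LinkedHashMap<>();
        Map<String, String> requests = new LinkedHashMap<>();
        requests.put("cpu", "1");
        requests.put("memory", "2G");
        resources.put("requests", requests);
        resources.put("limits", new LinkedHashMap<>(requests));

        requestExtendedResource(resources, "xilinx.com/fpga", 1);
        System.out.println(resources);
        // {requests={cpu=1, memory=2G, xilinx.com/fpga=1}, limits={cpu=1, memory=2G, xilinx.com/fpga=1}}
    }
}
```

Setting the same value in both requests and limits matters. Kubernetes only supports extended resources such as `xilinx.com/fpga` as integer quantities and does not overcommit them, so if both fields are present they must be equal.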

Step 6: Enjoy seamless FPGA acceleration with the ease of serverless computing!

At InAccel our goal is seamless acceleration. We are continuously extending our accelerator portfolio and the computing frameworks we support, in order to make FPGA acceleration mainstream.

Do not hesitate to contact us at info@inaccel.com for more details on our FPGA-accelerated Kubeless, and try it out on AWS.

Georgios Zervakis,

Solution Cloud Architect, InAccel, Inc.